Advancements In Electrical Machine Analysis and Development: Powering the Future

In the intricate tapestry of technological evolution, electrical machines have emerged as the threads binding our modern world together.


Sudha Sharma : October 8, 2023

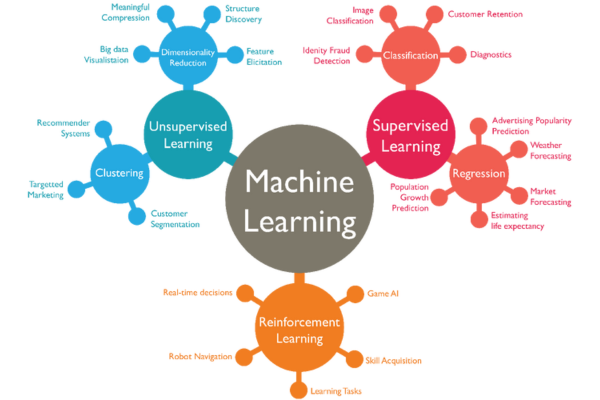

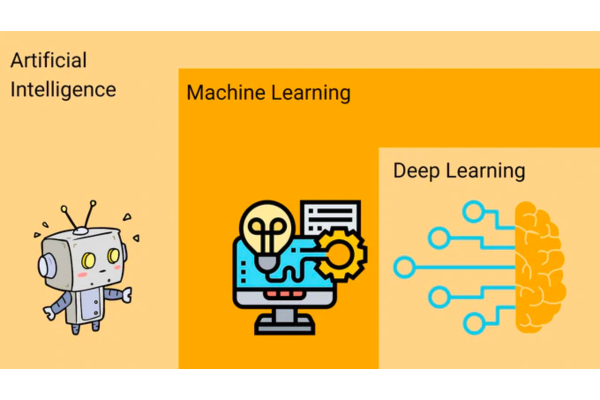

Machine learning has taken the world by storm in recent years, transforming industries ranging from healthcare to finance. The ability to extract valuable predictions from data has opened up a world of possibilities.

However, one of the main challenges in machine learning has always been speed, both in model development and in deployment.

In this blog, we'll delve into the concept of "FastML" and explore various techniques and tools that can speed up the machine learning pipeline.

Before turning to the solutions, let's first understand why speed is crucial in machine learning.

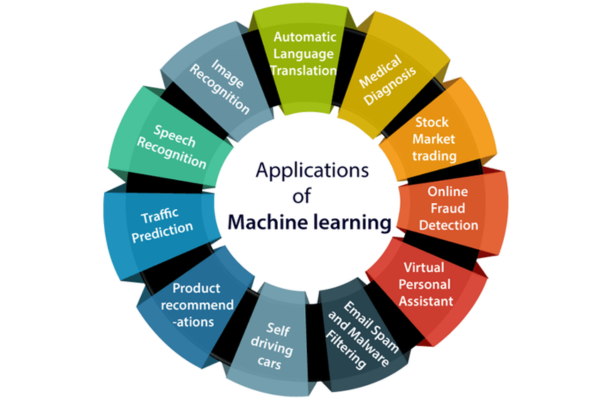

FastML is not just a theoretical concept; it has tangible applications across various industries:

While FastML offers numerous benefits, it also presents several challenges:

In the future, we can expect FastML to continue evolving:

FastML is not just a buzzword; it's a necessity in today's data-driven world. Speeding up the machine learning pipeline from data preprocessing to model deployment has wide-ranging implications across industries, from improving healthcare outcomes to optimizing financial strategies and enhancing customer experiences.

As we continue to advance in the field of machine learning, the pursuit of faster, more efficient techniques and tools will remain at the forefront of research and development.

FastML is not just a means to an end; it's the catalyst that propels us into a future where data-driven decision-making happens at the speed of thought.
